Webmaster Level: Intermediate

The Google Webmaster Tools Index Status feature reports how many pages on your site are indexed by Google. In the past, we didn’t show index status data for HTTPS websites independently, but rather we included everything in the HTTP site’s report. In recent months, we’ve heard from you that you’d like to use Webmaster Tools to track your indexed URLs for sections of your website, including the parts that use HTTPS.

We’ve seen that nearly 10% of all URLs already use a secure connection to transfer data via HTTPS, and we hope to see more webmasters move their websites from HTTP to HTTPS in the future. We’re happy to announce a refinement in the way your site’s index status data is displayed in Webmaster Tools: the Index Status feature now tracks your site’s indexed URLs for each protocol (HTTP and HTTPS) as well as for verified subdirectories.

This makes it easy for you to monitor different sections of your site. For example, the following URLs each show their own data in the Webmaster Tools Index Status report, provided they are verified separately:

The refined data will be visible for webmasters whose site’s URLs are on HTTPS or who have subdirectories verified, such as https://example.com/folder/. Data for subdirectories will be included in the higher-level verified sites on the same hostname and protocol.
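To make the roll-up rule concrete, here is a minimal sketch of how a URL maps to verified sites. It assumes a simple match on protocol, hostname, and path prefix; the function name `covering_properties` and the example URLs are hypothetical and only illustrate the rule described above, not how Webmaster Tools is implemented.

```python
from urllib.parse import urlsplit


def covering_properties(url, verified_properties):
    """Return the verified sites whose Index Status data would include `url`.

    Assumption: a verified site covers a URL when the protocol and hostname
    are identical and the site's path is a directory prefix of the URL's path.
    """
    target = urlsplit(url)
    target_path = target.path or "/"
    matches = []
    for prop in verified_properties:
        site = urlsplit(prop)
        same_origin = (site.scheme, site.netloc) == (target.scheme, target.netloc)
        # Treat the verified path as a directory, so "/folder" becomes "/folder/".
        prefix = site.path if site.path.endswith("/") else site.path + "/"
        if same_origin and target_path.startswith(prefix):
            matches.append(prop)
    return matches


verified = [
    "http://example.com/",
    "https://example.com/",
    "https://example.com/folder/",
]
print(covering_properties("https://example.com/folder/page.html", verified))
```

In this sketch the HTTPS page is counted for both `https://example.com/` and `https://example.com/folder/`, but not for the HTTP site, since the protocol differs.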

If you have a website on HTTPS or if some of your content is indexed under different subdomains, you will see a change in the corresponding Index Status reports. For instance, the screenshots below illustrate the changes that you may see on your HTTP and HTTPS sites’ Index Status graphs:

HTTP site’s Index Status showing drop

HTTPS site’s Index Status showing increase

An “Update” annotation has been added to the Index Status graph for March 9th, showing when we started collecting this data. This change does not affect the way we index your URLs, nor does it have an impact on the overall number of URLs indexed on your domain. It is a change that only affects the reporting of data in the Webmaster Tools user interface.

In order to see your data correctly, you will need to verify all existing variants of your site (www., non-www., HTTPS, subdirectories, subdomains) in Google Webmaster Tools. We recommend that your preferred domains and canonical URLs are configured accordingly.

Note that if you wish to submit a Sitemap, you will need to do so for the preferred variant of your website, using the corresponding URLs.

Robots.txt files are also read separately for each protocol and hostname.
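As a quick checklist, the following sketch enumerates the protocol and hostname variants of a site along with the robots.txt location for each one. The helper names and the default of checking only the bare and `www.` hostnames are assumptions for illustration; add any other subdomains or subdirectories you use.

```python
from itertools import product


def site_variants(domain, subdomains=("", "www.")):
    """List the protocol/hostname variants to verify for `domain`."""
    return [
        f"{scheme}://{sub}{domain}/"
        for scheme, sub in product(("http", "https"), subdomains)
    ]


def robots_txt_urls(domain, subdomains=("", "www.")):
    """Each protocol and hostname combination has its own robots.txt file."""
    return [variant + "robots.txt" for variant in site_variants(domain, subdomains)]


for url in robots_txt_urls("example.com"):
    print(url)
```

For `example.com` this yields four sites to verify and four robots.txt URLs to review, one per variant.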

We hope that you’ll find this update useful, and that it’ll help you monitor, identify and fix indexing problems with your website. You can find additional details in our Index Status Help Center article. As usual, if you have any questions, don’t hesitate to ask in our webmaster Help Forum.

Posted by Zineb Ait Bahajji, WTA, thanks to the Webmaster Tools team.

Webmaster level: all

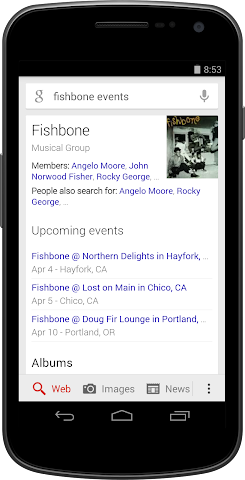

When music lovers search for their favorite band on Google, we often show them a Knowledge Graph panel with lots of information about the band, including the band’s upcoming concert schedule. It’s important to fans and artists alike that this schedule be accurate and complete. That’s why we’re trying a new approach to concert listings. In our new approach, all concert information for an artist comes directly from that artist’s official website when they add structured data markup.

If you’re the webmaster for a musical artist’s official website, you have several choices for how to participate:

- You can implement schema.org markup on your site. That’s easier than ever, since we’re supporting the new JSON-LD format (alongside RDFa and microdata) for this feature.

- Even easier, you can install an events widget that has structured data markup built in, such as Bandsintown, BandPage, ReverbNation, Songkick, or GigPress.

- You can label the site’s events with your mouse using Google’s point-and-click webmaster tool: Data Highlighter.
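For the first option, a minimal sketch of JSON-LD event markup might look like the following. It uses the schema.org `MusicEvent`, `Place`, and `MusicGroup` types; the band, venue, date, and URL are made-up placeholders, and the exact set of properties Google expects is described in the Help Center rather than here.

```python
import json


def music_event_jsonld(name, start_date, venue, address, performer, url):
    """Build a JSON-LD <script> block describing one concert."""
    event = {
        "@context": "http://schema.org",
        "@type": "MusicEvent",
        "name": name,
        "startDate": start_date,  # ISO 8601 date or date-time
        "url": url,
        "location": {"@type": "Place", "name": venue, "address": address},
        "performer": {"@type": "MusicGroup", "name": performer},
    }
    body = json.dumps(event, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical example values.
print(music_event_jsonld(
    name="Example Band at The Example Hall",
    start_date="2014-05-23T20:00",
    venue="The Example Hall",
    address="123 Example Street, Springfield",
    performer="Example Band",
    url="http://www.example.com/tour/springfield",
))
```

The resulting block can be placed in the HTML of the artist's official tour page, one event object per concert.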

All these options are explained in detail in our Help Center. If you have any questions, feel free to ask in our Webmaster Help forums. So don’t you worry `bout a schema.org/Thing … just mark up your site’s events and let the good schema.org/Times roll!

Posted by Justin Boyan, Product Manager, Google Search

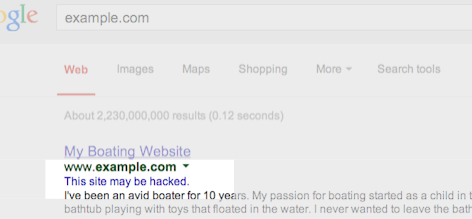

Google shows this message in search results for sites that we believe may have been compromised. You might not think your site is a target for hackers, but it’s surprisingly common. Hackers target large numbers of sites all over the web in order to exploit the sites’ users or reputation.

One common way hackers take advantage of vulnerable sites is by adding spammy pages. These spammy pages are then used for various purposes, such as redirecting users to undesired or harmful destinations. For example, we’ve recently seen an increase in hacked sites redirecting users to fake online shopping sites.

Once you recognize that your website may have been hacked, it’s important to diagnose and fix the problem as soon as possible. We want webmasters to keep their sites secure in order to protect users from spammy or harmful content.

3 tips to help you find hacked content on your site

- Check your site for suspicious URLs or directories

Keep an eye out for any suspicious activity on your site by performing a “site:” search of your site in Google, such as [site:example.com]. Are there any suspicious URLs or directories that you do not recognize?

You can also set up a Google Alert for your site. For example, if you set a Google Alert for [site:example.com (viagra|cialis|casino|payday loans)], you’ll receive an email when these keywords are detected on your site.

-

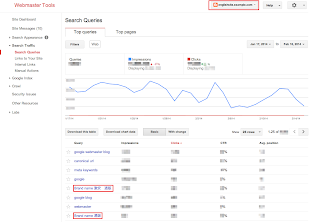

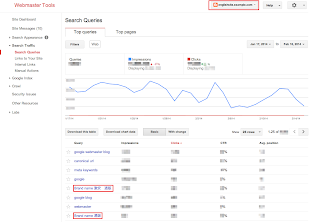

Look for unnatural queries on the Search Queries page in Webmaster Tools

The Search Queries page shows Google Web Search queries that have returned URLs from your site. Look for unexpected queries, as they can be an indication of hacked content on your site.

Don’t be quick to dismiss queries in different languages. This may be the result of spammy pages in other languages placed on your website.

Example of an English site hacked with Japanese content.

- Enable email forwarding in Webmaster Tools

Google will send you a message if we detect that your site may be compromised. Messages appear in Webmaster Tools’ Message Center but it’s a best practice to also forward these messages to your email. Keep in mind that Google won’t be able to detect all kinds of hacked content, but we hope our notifications will help you catch things you may have missed.
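The first two tips can be partly automated. The sketch below builds the alert query shown above and flags entries in a list of search queries that contain spam terms or non-ASCII characters. The term list comes from the example in this post; the non-ASCII check is a rough heuristic that assumes an English-only site, and the function names and sample queries are hypothetical.

```python
SPAM_TERMS = ("viagra", "cialis", "casino", "payday loans")


def alert_query(domain, terms=SPAM_TERMS):
    """Build a site: query suitable for a search or a Google Alert."""
    return f"site:{domain} ({'|'.join(terms)})"


def suspicious_queries(queries, terms=SPAM_TERMS):
    """Return queries that mention a spam term or use non-ASCII text.

    Assumption: the site is English-only, so non-ASCII queries are unusual.
    Sites with legitimate multilingual traffic should relax this check.
    """
    flagged = []
    for query in queries:
        lowered = query.lower()
        has_spam_term = any(term in lowered for term in terms)
        if has_spam_term or not query.isascii():
            flagged.append(query)
    return flagged


print(alert_query("example.com"))
print(suspicious_queries(["blue widgets", "cheap viagra online", "激安 ブランド"]))
```

Anything this flags still needs a manual look, since a match only suggests where hacked content might be.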

Tips to fix and prevent hacking

- Stay informed

The Security Issues section in Webmaster Tools will show you hacked pages that we detected on your site. We also provide detailed information to help you fix your hacked site. Make sure to read through this documentation so you can quickly and effectively fix your site.

- Protect your site from potential attacks

It’s better to prevent sites from being hacked than to clean up hacked content. Hackers will often take advantage of security vulnerabilities on commonly used website management software. Here are some tips to keep your site safe from hackers:

- Always keep the software that runs your website up-to-date.

- If your website management software tools offer security announcements, sign up to get the latest updates.

- If the software for your website is managed by your hosting provider, try to choose a provider that you can trust to maintain the security of your site.

We hope this post makes it easier for you to identify, fix, and prevent hacked spam on your site. If you have any questions, feel free to post in the comments, or drop by the Google Webmaster Help Forum.

If you find suspicious sites in Google search results, please report them using the Spam Report tool.

Posted by Megumi Hitomi, Japanese Search Quality Team

Webmaster level: All

Our quality guidelines warn against running a site with thin or scraped content without adding substantial value for the user. Recently, we’ve seen this behavior on many video sites, particularly in the adult industry, but also elsewhere. These sites display content provided by an affiliate program, the same content that is available across hundreds or even thousands of other sites.

If your site syndicates content that’s available elsewhere, a good question to ask is: “Does this site provide significant added benefits that would make a user want to visit this site in search results instead of the original source of the content?” If the answer is “No,” the site may frustrate searchers and violate our quality guidelines. As with any violation of our quality guidelines, we may take action, including removal from our index, in order to maintain the quality of our users’ search results. If you have any questions about our guidelines, you can ask them in our Webmaster Help Forum.

Posted by Chris Nelson, Search Quality Team

Webmaster level: intermediate-advanced

In the past, we have seen occasional confusion by webmasters regarding how crawl errors on redirecting pages were shown in Webmaster Tools. It’s time to make this a bit clearer and easier to diagnose! While it used…

Webmaster level: intermediate

To help jump-start your year and make metrics for your site more actionable, we’ve updated one of the most popular features in Webmaster Tools: data in the search queries feature will no longer be rounded / bucketed. This c…

Webmaster Level: All

Search Queries in Webmaster Tools just became more cohesive for those who manage a mobile site on a separate URL from desktop, such as mobile on m.example.com and desktop on www. In Search Queries, when you view your m. site* and set Filters to “Mobile,” from Dec 31, 2013 onwards, you’ll now see:

- Queries where your m. pages appeared in search results for mobile browsers

- Queries where Google applied Skip Redirect. This means that, while search results displayed the desktop URL, the user was automatically directed to the corresponding m. version of the URL (thus saving the user from latency of a server-side redirect).

Skip Redirect information (impressions, clicks, etc.) calculated with mobile site.

Prior to this Search Queries improvement, Webmaster Tools reported Skip Redirect impressions with the desktop URL. Now we’ve consolidated information when Skip Redirect is triggered, so that impressions, clicks, and CTR are calculated solely with the verified m. site, making your mobile statistics more understandable.

Best practices if you have a separate m. site

Here are a few search-friendly recommendations for those publishing content on a separate m. site:

- Follow our advice on Building Smartphone-Optimized Websites

- On the desktop page, add a special link rel="alternate" tag pointing to the corresponding mobile URL. This helps Googlebot discover the location of your site’s mobile pages.

- On the mobile page, add a link rel="canonical" tag pointing to the corresponding desktop URL.

- Use the HTTP Vary: User-Agent header if your servers automatically redirect users based on their user agent/device.

- Verify ownership of both the desktop (www) and mobile (m.) sites in Webmaster Tools for improved communication and troubleshooting information specific to each site.

* Be sure you’ve verified ownership for your mobile site!
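The link annotations and header from the list above can be sketched as follows. The `media` value is an assumption for illustration (pick the query that matches your own mobile breakpoint), and the helper names and URLs are hypothetical.

```python
from html import escape


def desktop_alternate_tag(mobile_url, media="only screen and (max-width: 640px)"):
    """Tag for the desktop page, pointing at the corresponding m. URL.

    Assumption: the media query shown here is only an example value.
    """
    return (
        f'<link rel="alternate" media="{escape(media)}" '
        f'href="{escape(mobile_url)}">'
    )


def mobile_canonical_tag(desktop_url):
    """Tag for the mobile page, pointing back at the desktop URL."""
    return f'<link rel="canonical" href="{escape(desktop_url)}">'


# Response header to send when redirecting based on the user agent.
VARY_HEADER = ("Vary", "User-Agent")

print(desktop_alternate_tag("http://m.example.com/page-1"))
print(mobile_canonical_tag("http://www.example.com/page-1"))
print(": ".join(VARY_HEADER))
```

The two tags should always reference each other as a pair, so each desktop page points to exactly one mobile page and vice versa.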

Written by Maile Ohye, Developer Programs Tech Lead

Now that 2013 is almost over, we’d love to take a quick look back, and venture a glimpse into the future. Some of the important topics on our blog from 2013 were around mobile, internationalization, and search quality in general. Here are some of the m…

Webmaster level: all

Content on the Internet changes or disappears, and occasionally it’s helpful to have search results for it updated quickly. Today we launched our improved public URL removal tool to make it easier to request updates based on changes…

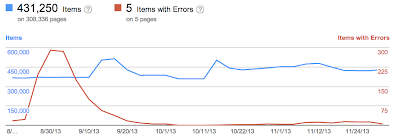

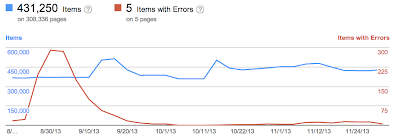

Since we launched the Structured Data dashboard last year, it has quickly become one of the most popular features in Webmaster Tools. We’ve been working to expand it and make it even easier to debug issues so that you can see how Google understands the marked-up content on your site.

Starting today, you can see items with errors in the Structured Data dashboard. This new feature is a result of a collaboration with webmasters, whom we invited in June to register as early testers of markup error reporting in Webmaster Tools. We’ve incorporated their feedback to improve the functionality of the Structured Data dashboard.

An “item” here represents one top-level structured data element (nested items are not counted) tagged in the HTML code. They are grouped by data type and ordered by number of errors:

We’ve added a separate scale for the errors on the right side of the graph in the dashboard, so you can compare items and errors over time. This can be useful to spot connections between changes you may have made on your site and markup errors that are appearing (or disappearing!).

Our data pipelines have also been updated for more comprehensive reporting, so you may initially see fewer data points in the chronological graph.

How to debug markup implementation errors

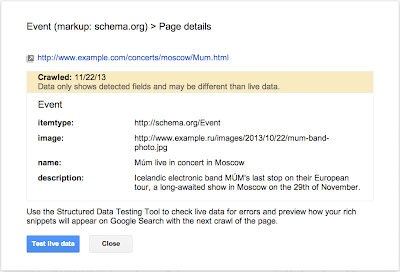

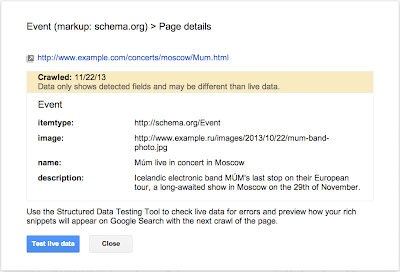

- To investigate an issue with a specific content type, click on it and we’ll show you the markup errors we’ve found for that type. You can see all of them at once, or filter by error type using the tabs at the top:

- Check to see if the markup meets the implementation guidelines for each content type. In our example case (events markup), some of the items are missing a startDate or name property. We also surface missing properties for nested content types (e.g. a review item inside a product item); in this case, this is the lowprice property.

- Click on URLs in the table to see details about what markup we’ve detected when we crawled the page last and what’s missing. You can also use the “Test live data” button to test your markup in the Structured Data Testing Tool. Often when checking a bunch of URLs, you’re likely to spot a common issue that you can solve with a single change (e.g. by adjusting a setting or template in your content management system).

- Fix the issues and test the new implementation in the Structured Data Testing Tool. After the pages are recrawled and reprocessed, the changes will be reflected in the Structured Data dashboard.
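Before waiting for a recrawl, you can run a similar check on your own markup. The sketch below looks for missing properties in already-parsed event items; the required-property list only mirrors the `name` and `startDate` example above and is an assumption, so consult the implementation guidelines for the real requirements. The function names and sample data are hypothetical.

```python
# Assumption: properties to check per type, taken from the example in this post.
REQUIRED = {"Event": ("name", "startDate")}


def missing_properties(item, required=REQUIRED):
    """Return the required properties that are absent or empty in `item`."""
    needed = required.get(item.get("@type"), ())
    return [prop for prop in needed if not item.get(prop)]


def error_report(items_by_url, required=REQUIRED):
    """Map each URL to its missing properties, skipping items with no errors."""
    report = {}
    for url, item in items_by_url.items():
        missing = missing_properties(item, required)
        if missing:
            report[url] = missing
    return report


pages = {
    "http://www.example.com/events/1": {"@type": "Event", "name": "Spring Gala"},
    "http://www.example.com/events/2": {
        "@type": "Event",
        "name": "Summer Fair",
        "startDate": "2014-07-01",
    },
}
print(error_report(pages))
```

If the same property is missing on many URLs, that usually points to a template or CMS setting rather than to individual pages.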

We hope this new feature helps you manage the structured data markup on your site better. We will continue to add more error types in the coming months. Meanwhile, we look forward to your comments and questions here or in the dedicated Structured Data section of the Webmaster Help forum.

Posted by Mariya Moeva, Webmaster Trends Analyst