Scraping Schema Markup for Competitive Intelligence

Structured markup is important for e-commerce websites if they want to stand out in the SERPs. Because e-commerce sites are usually built to scale, scraping all of their data is easy. All it takes is a Screaming Frog crawl and OutWit Hub.

For dropshippers and affiliate sites, harvesting competitor data from schema markup tags can be extremely useful. If you are selling the same products as your competitors, you can compare pricing, product descriptions, calls to action, special promotions, or anything else, and analyze how you stack up against them.

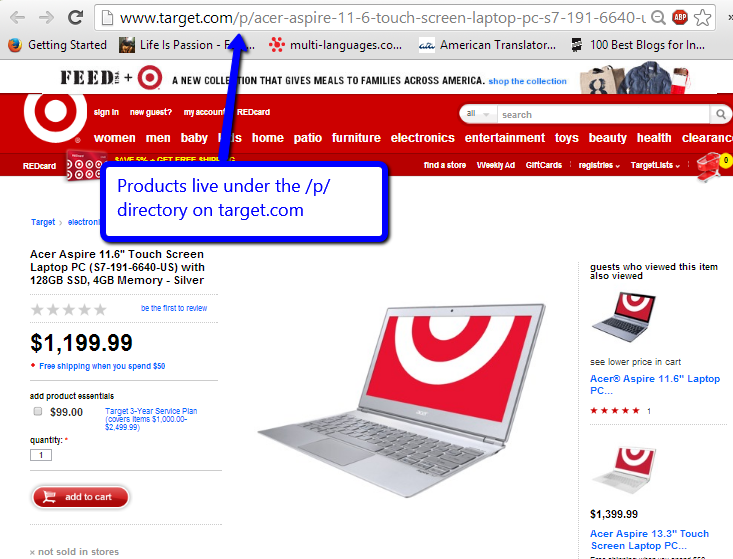

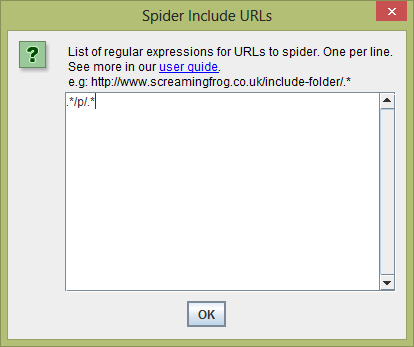

Before we can begin, we need to figure out where products live on the competitor’s site. If your competitor has a logically built information architecture, this shouldn’t be too difficult. Target.com uses the /p/ directory for its products.

Step 1) Crawl and Gather Product Pages

To get the pages that live under the /p/ directory, fire up Screaming Frog and, under Configuration > Include, add .*/p/.*

Now your Screaming Frog export will only include product pages.
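If you already have a full crawl export and want to apply the same filter after the fact, here is a minimal Python sketch. It assumes the include rule is matched against the whole URL, and the URLs below are made-up placeholders, not real Target pages.

```python
import re

# Same pattern as the Screaming Frog include rule above.
PRODUCT_PATTERN = re.compile(r".*/p/.*")


def filter_product_urls(urls):
    """Keep only the URLs that match the include pattern in full."""
    return [url for url in urls if PRODUCT_PATTERN.fullmatch(url)]


# Hypothetical crawl output, for illustration only.
crawled = [
    "http://www.example.com/p/acer-aspire-laptop/-/A-0000001",
    "http://www.example.com/c/laptops/-/N-0000",
    "http://www.example.com/p/hp-pavilion-laptop/-/A-0000002",
]

product_urls = filter_product_urls(crawled)
for url in product_urls:
    print(url)
```

Only the two /p/ URLs survive the filter; the category page is dropped.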

So everyone can follow along and work with the same data, I’ve gone ahead and scraped all of the laptops currently listed on the Target.com site, which you can get here:

List of Target Laptops (09/10/2013)

Step 2) Analyze Structured Markup and On-Page Elements

Take one of the product pages from your Screaming Frog export. For this example, we’ll use the Acer Aspire 11.6″ Touch Screen Laptop PC page. If you enter the URL into the Rich Snippets Testing Tool, you’ll see that Target is using a ton of structured markup on its product pages.

For this exercise, we’re going to scrape:

- Price

- SKU

- Product Name

- Battery Charge Life (non-schema element)

- Call to action/Promotions (non-schema element)
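For the three schema fields in the list above, the same values can be read straight from microdata attributes. The sketch below uses only Python’s standard library and assumes the page marks up its fields with `itemprop="name"`, `itemprop="sku"` and `itemprop="price"`; the sample HTML is invented for illustration and is not Target’s actual source. The non-schema elements (battery life, promotions) have no `itemprop` and would need a different approach.

```python
from html.parser import HTMLParser


class ItempropParser(HTMLParser):
    """Collect the first value seen for each wanted itemprop."""

    def __init__(self, wanted):
        super().__init__()
        self.wanted = set(wanted)
        self.values = {}
        self._current = None  # itemprop whose text we are waiting for

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        prop = attrs.get("itemprop")
        if prop not in self.wanted or prop in self.values:
            return
        if attrs.get("content") is not None:
            # <meta itemprop="sku" content="..."> style markup
            self.values[prop] = attrs["content"].strip()
        else:
            # Value is the element's text, picked up in handle_data
            self._current = prop

    def handle_data(self, data):
        if self._current and data.strip():
            self.values[self._current] = data.strip()
            self._current = None


# Hypothetical product markup, for illustration only.
sample_html = """
<div itemscope itemtype="http://schema.org/Product">
  <h2 itemprop="name">Example Laptop</h2>
  <meta itemprop="sku" content="12345678">
  <span itemprop="price">$299.99</span>
</div>
"""

parser = ItempropParser(["name", "sku", "price"])
parser.feed(sample_html)
print(parser.values)
```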

Step 3) Fire Up OutWit Hub

OutWit Hub is a desktop scraper/data harvester. It costs $60 a year and is well worth it. OutWit can use cookies, so scraping behind a paywall or on a password-protected site is a non-issue. Instead of having to use XPath to scrape data, OutWit Hub lets you highlight the source code and set markers to scrape everything that lies in between. If you are not a technical marketer and you find yourself having to gather a lot of data and wasting your time, this is a great tool to have in your arsenal.

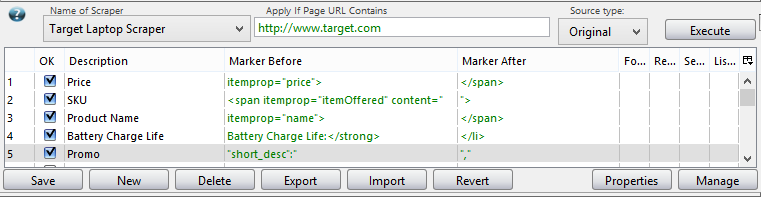

Step 4) Build Your Scraper

This can be intimidating at first, but it’s much more scalable than trying to use Excel or Google Docs to scrape hundreds and hundreds of data points.

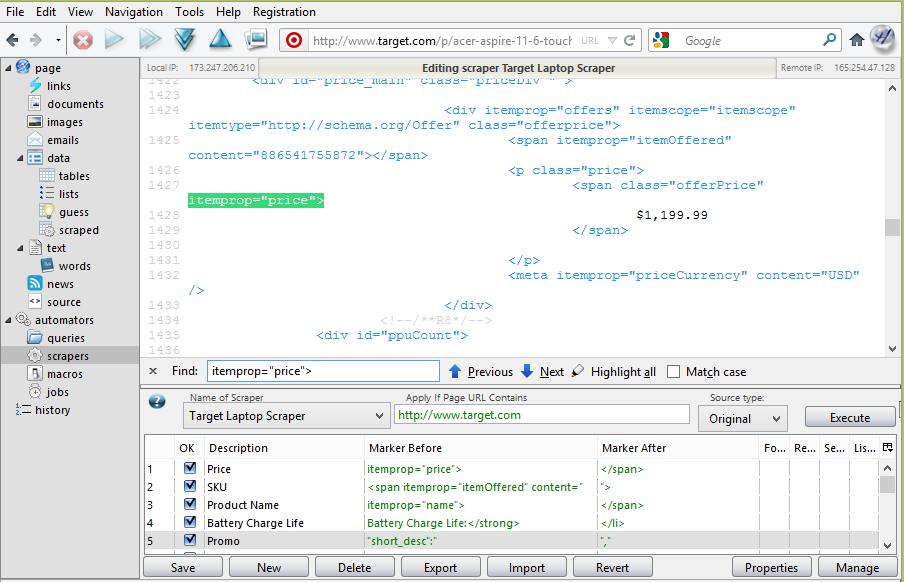

In the right-hand menu, click on Scrapers. Enter the example Target URL. This will load the source code.

Click the “New” button in the lower part of the screen and name your scraper. I’m calling mine “Target Laptop Scraper.”

In the search field, start entering the markup for the schema tags you want to scrape. Remember, this isn’t XPath: you don’t need to worry about the DOM, you only need to figure out what unique source code comes before the element (the schema tag) and what comes after it.

Extreme Close Up!

It’ll take some practice at first, but once you get the hang of it, it will only take a few minutes to set up a custom scraper.
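If the before/after marker idea is new to you, it may help to see it as plain string matching. This is a rough Python sketch of the concept, not how OutWit Hub is implemented; the source snippet and marker strings are hypothetical.

```python
def extract_between(source, before, after):
    """Return the text between the first `before` marker and the next
    `after` marker, or None if either marker is missing."""
    start = source.find(before)
    if start == -1:
        return None
    start += len(before)
    end = source.find(after, start)
    if end == -1:
        return None
    return source[start:end].strip()


# Hypothetical page source, for illustration only.
sample_source = (
    '<span itemprop="price">$299.99</span>'
    '<li class="battery">Battery life: 5 hours</li>'
)

# One (marker before, marker after) pair per field, like rows in a scraper.
markers = {
    "price": ('itemprop="price">', "</span>"),
    "battery": ("Battery life: ", "</li>"),
}

row = {
    field: extract_between(sample_source, before, after)
    for field, (before, after) in markers.items()
}
print(row)
```

Because the markers are just unique chunks of source code, the same approach works for schema and non-schema elements alike.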

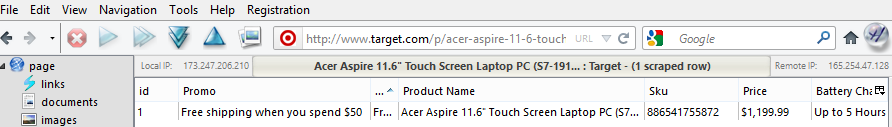

Step 5) Test Your Scraper

When you’re done entering the markers for the data you want to collect, hit the Execute button and check your results. You should see something like this:

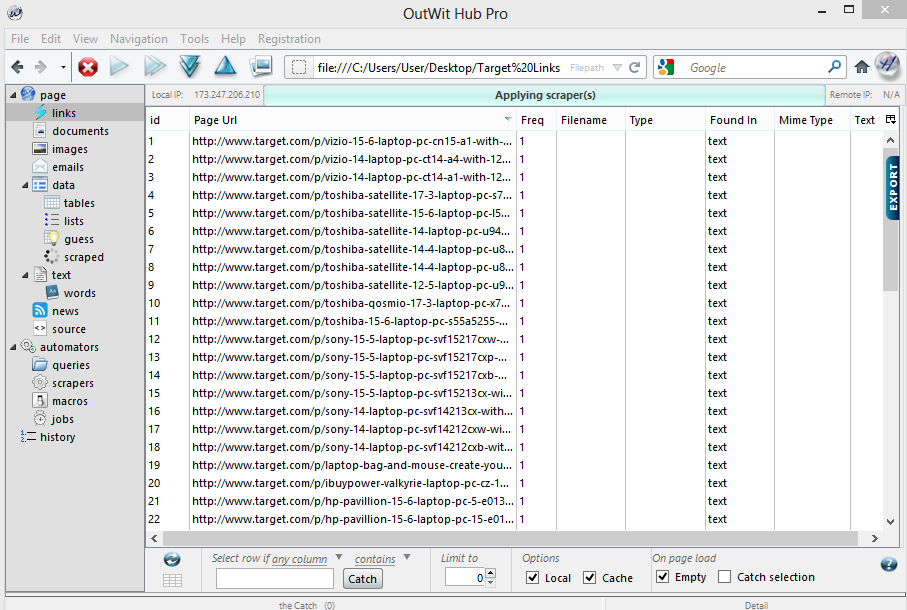

Step 6) Put the list of URLs into a .txt file and save it.

Any storage device or your local computer will do.

Step 7) Open the .txt file in OutWit using the File menu

If you go to the left navigation, just below the main directory, there’s a subdirectory called “Links.” Click on Links in the left-hand nav. This is what you should see:

Select all of the data using Ctrl+A and then right-click the row with all of the URLs.

Step 7) Fast Scrape!

In the right click on menu, make a choice: Auto-Explore >Quick Scrape (Embrace Selected Knowledge) > And make a choice the scraper we just built collectively.

right Here’s a video of the closing step in Outwit

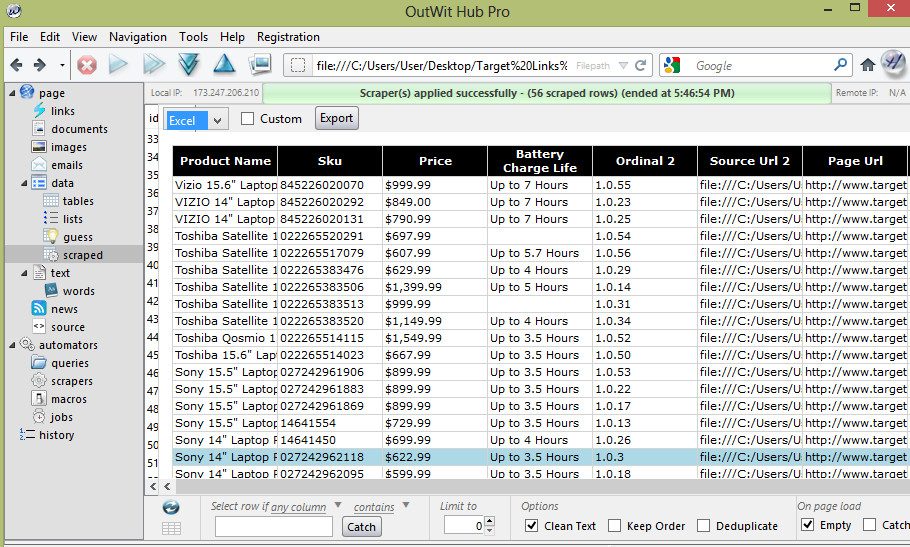

Step eight) Bask within the glory of your competitor’s knowledge

In the left-hand navigation, there is a category called “data” with the subcategory “scraped.” In case you navigated away from it, that’s where all of your data will be stored. Just be careful not to load a new URL in OutWit Hub, or else it will be overwritten and you’ll have to scrape all over again.

You can export your data to HTML, TXT, CSV, SQL, or Excel. I usually just go for an Excel export and do a VLOOKUP to combine the data with the original Screaming Frog crawl from Step 1.
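If you’d rather skip Excel, the VLOOKUP step is just a lookup keyed on URL. Here is a minimal sketch using Python’s `csv` module. The column names (`Address`, `Title`, `URL`, `Price`, `SKU`) and the rows are assumptions for illustration, so swap in whatever headers your own exports use.

```python
import csv
import io

# Hypothetical exports held in memory; with real files you would use
# open(path, newline="") instead of io.StringIO.
crawl_csv = io.StringIO(
    "Address,Title\n"
    "http://www.example.com/p/a,Laptop A\n"
    "http://www.example.com/p/b,Laptop B\n"
)
scraped_csv = io.StringIO(
    "URL,Price,SKU\n"
    "http://www.example.com/p/a,$299.99,111\n"
)

# Index the scraped rows by URL, like the lookup range of a VLOOKUP.
scraped_by_url = {row["URL"]: row for row in csv.DictReader(scraped_csv)}

merged = []
for row in csv.DictReader(crawl_csv):
    match = scraped_by_url.get(row["Address"], {})
    merged.append({
        "Address": row["Address"],
        "Title": row["Title"],
        # Blank when the URL was crawled but not scraped.
        "Price": match.get("Price", ""),
        "SKU": match.get("SKU", ""),
    })

for row in merged:
    print(row)
```

Crawled URLs with no scraped match keep blank price and SKU cells, much like wrapping the VLOOKUP in IFERROR.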

Got any fun potential use cases?

Share them below in the comments!

Image source via Flickr user avargado

The post Scraping Schema Markup for Competitive Intelligence appeared first on SEOgadget.